NVIDIA is taking a further step in large language model (LLM) development with NeMo Guardrails, a new feature that aims to bring chatbots back to more “controlled” and “lawful” behavior.

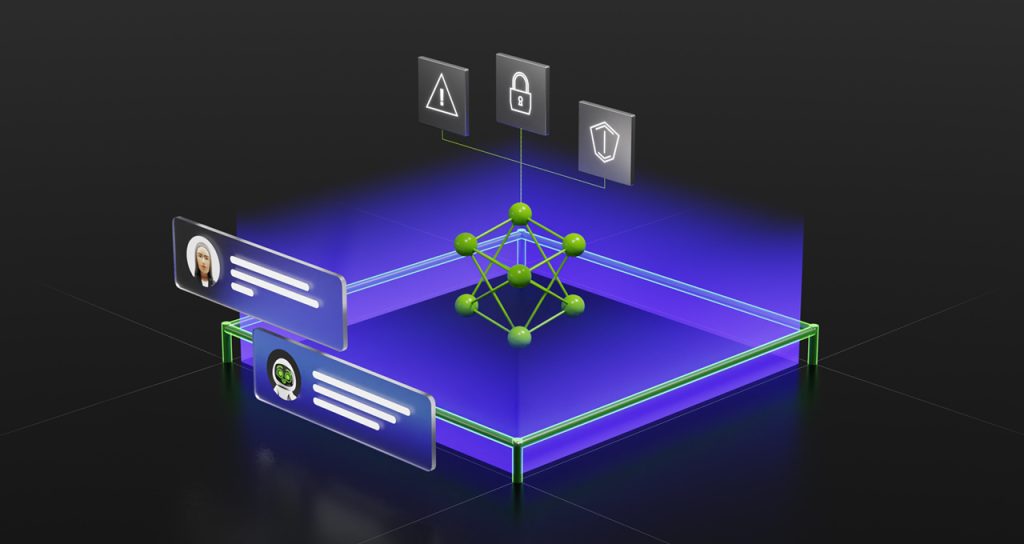

With 2023 shaping up to be the year AI finds its way into all sorts of applications, it is important to set boundaries on what these models can access or infer. NeMo Guardrails is designed to help ensure that LLM-powered applications are accurate, appropriate, on topic, and secure. ChatGPT, Bard, or whatever other “smart assistant” comes out this year can all integrate NeMo Guardrails through three sets of guardrails:

- Topical Guardrails – Keep apps from drifting off topic into undesired areas. For example, a weather assistant won’t start talking about food when you ask about the weather.

- Safety Guardrails – Ensure apps respond with accurate and appropriate information. They can also filter out unwanted language and require that responses cite only credible sources.

- Security Guardrails – Restrict apps to connecting only with external third-party applications that have been verified.
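To make the topical idea concrete, here is a toy sketch in Python. It is not the NeMo Guardrails API: the real toolkit defines its rails in its own modeling language, Colang. The keyword sets, the `topical_rail` and `guarded_reply` helpers, and the refusal message below are all hypothetical, and plain keyword matching stands in for whatever topic detection a production rail would actually use.

```python
from typing import Callable, Optional

# Toy illustration only: this is NOT the NeMo Guardrails API.
# Hypothetical keyword sets for a weather-only assistant.
ALLOWED_TOPIC_KEYWORDS = {"weather", "forecast", "rain", "snow", "sunny", "temperature"}
BLOCKED_TOPIC_KEYWORDS = {"food", "recipe", "restaurant", "pizza"}
REFUSAL = "Sorry, I can only help with questions about the weather."


def topical_rail(user_message: str) -> Optional[str]:
    """Return a refusal if the message is off topic, else None."""
    # Lowercase each word and strip surrounding punctuation.
    words = {w.strip(".,!?'\"").lower() for w in user_message.split()}
    if words & BLOCKED_TOPIC_KEYWORDS:
        return REFUSAL  # an explicitly blocked topic was mentioned
    if not (words & ALLOWED_TOPIC_KEYWORDS):
        return REFUSAL  # nothing on topic was detected
    return None


def guarded_reply(user_message: str, bot: Callable[[str], str]) -> str:
    """Run the topical rail first; only on-topic messages reach the bot."""
    refusal = topical_rail(user_message)
    if refusal is not None:
        return refusal
    return bot(user_message)


# Usage with a stand-in bot function (a real app would call an LLM here).
print(guarded_reply("Will it snow this weekend?", lambda m: "Light snow expected."))
print(guarded_reply("Any good pizza recipe?", lambda m: "unreachable"))
```

In this sketch the rail runs before the model is ever called, so an off-topic request never reaches the LLM at all.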

Because NeMo Guardrails is fully open source, technically anyone can modify these rules to suit their specific use case.

NVIDIA is also adding it to its own NVIDIA NeMo framework. It is available either free of charge on GitHub, where anyone can modify it, or as a complete, officially supported package with expert guidance as part of the NVIDIA AI Enterprise software platform.

For the nerdy gang who want to see what’s under the hood, check out NVIDIA’s technical blog post on NeMo Guardrails.