At NVIDIA GTC 2022, CEO Jensen Huang finally revealed the successor to the Ampere architecture. Please welcome Hopper.

Given how much value AI computing can generate, it seems inevitable that the data center industry will move toward full-scale, machine learning-powered infrastructure to keep up with modern data processing demands. The Hopper architecture, debuting in its first product, the NVIDIA H100 GPU, is therefore expected to power some of the greatest achievements the world has yet to see.

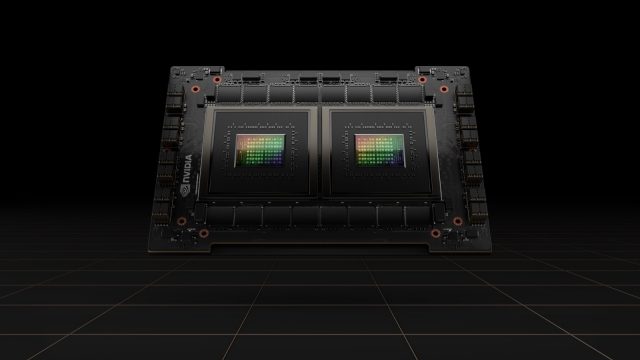

For starters, the hardware itself is already off the charts: each GPU packs 80 billion transistors built on TSMC’s 4N process and delivers nearly 5TBps of external connectivity, with PCIe Gen 5 support. Not only that, but it is also the first GPU to use HBM3 memory, with 3TBps of memory bandwidth. For scale, NVIDIA says just 20 H100 GPUs are enough to sustain the equivalent of the entire world’s internet traffic, which is a bonkers amount of throughput. Raw data-moving tech aside, the H100’s new dedicated Transformer Engine takes the lead role in pushing natural language processing forward, speeding up transformer networks by as much as 6 times over the previous generation.
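To put the "20 GPUs" claim into numbers, here is a quick back-of-the-envelope sketch. It assumes the "near 5TBps" figure can be rounded to 5.0 and that bandwidth adds up perfectly across GPUs, which real deployments will not achieve; the function name is made up for illustration.

```python
# Figures quoted in the article (rounded; "near 5TBps" is treated as 5.0 here).
EXTERNAL_TBYTES_PER_SEC_PER_GPU = 5.0
GPU_COUNT = 20


def aggregate_bandwidth(gpu_count: int, tbytes_per_sec_per_gpu: float) -> float:
    """Total external bandwidth in terabytes per second, assuming perfect scaling."""
    return gpu_count * tbytes_per_sec_per_gpu


total_tbytes = aggregate_bandwidth(GPU_COUNT, EXTERNAL_TBYTES_PER_SEC_PER_GPU)
total_tbits = total_tbytes * 8  # 8 bits per byte
print(f"{total_tbytes:.0f} TB/s aggregate, or {total_tbits:.0f} Tb/s")
```

In other words, the claim implies roughly 100 terabytes per second of combined external connectivity across those 20 GPUs.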

On the virtualization front, the new GPU does a much better job as well: it can be split into up to 7 fully isolated instances that handle completely different jobs at once, with 2nd-Generation Secure Multi-Instance GPU (MIG) doing the partitioning. With the world increasingly concerned about cybersecurity, the H100 follows suit by protecting AI models in addition to data, so the powerful software behind the calculations isn’t easily exposed. And because the H100 is built for large-scale deployment, 4th-Generation NVLink is part of the equation too: paired with a new external NVLink Switch, it can connect up to 256 H100 GPUs with 9x more bandwidth than the previous generation, which relied on NVIDIA HDR Quantum InfiniBand.

Another way to harness the H100 is the new DPX instruction set, which accelerates dynamic programming, a technique behind a broad range of algorithms such as route optimization for autonomous robot fleets and the Smith-Waterman algorithm used in genomics. All of these stand to benefit from the Hopper architecture. Built for industry deployment, the 4th-Generation DGX system, the DGX H100, features 8 H100 GPUs for an astounding 32 petaflops of AI performance at the new FP8 precision. Cloud service providers including Alibaba Cloud, Amazon Web Services, Baidu AI Cloud, Google Cloud, Microsoft Azure, Oracle Cloud, and Tencent Cloud are all set to offer H100-based instances.
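For a feel of what "dynamic programming" means in the Smith-Waterman case, here is a minimal sketch in plain Python. It runs on the CPU and does not use DPX in any way; it only shows the kind of add-then-take-the-max table fill that such algorithms repeat many times. The scoring values (match 2, mismatch -1, gap -1) and the function name are assumptions picked for illustration.

```python
def smith_waterman(a: str, b: str, match: int = 2,
                   mismatch: int = -1, gap: int = -1) -> int:
    """Return the best local alignment score of a and b (linear gap penalty)."""
    rows, cols = len(a) + 1, len(b) + 1
    # score[i][j] holds the best local alignment score ending at a[i-1], b[j-1].
    score = [[0] * cols for _ in range(rows)]
    best = 0
    for i in range(1, rows):
        for j in range(1, cols):
            diag = score[i - 1][j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            up = score[i - 1][j] + gap      # gap inserted in b
            left = score[i][j - 1] + gap    # gap inserted in a
            # Clamping at 0 lets an alignment restart anywhere (local alignment).
            score[i][j] = max(0, diag, up, left)
            best = max(best, score[i][j])
    return best


print(smith_waterman("ACGT", "ACGT"))  # 8: four matches at 2 points each
```

Every cell depends only on its three neighbors, so the whole table boils down to a long series of small add-and-compare steps, which is why this family of algorithms is a natural target for hardware acceleration.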

With all that said, we’ll just sit tight and wait for the release of the RTX 40 series carrying the rumored “Ada Lovelace” GPU. Or, if you’re on a budget, quickly grab an Ampere card while there’s still time.