NVIDIA’s latest announcements carry one simple theme – Generative AI is ready for everyone.

AI-Ready servers are, uh, ready

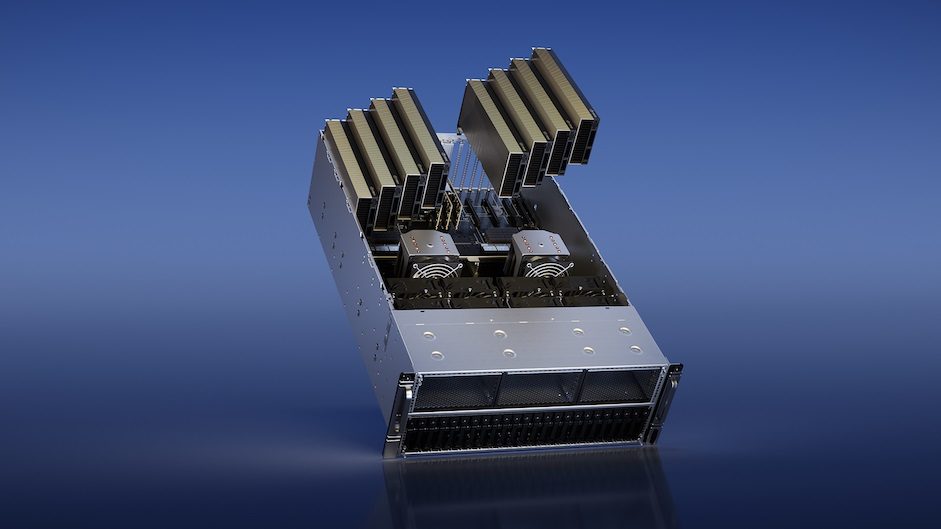

To start, partners across the world will begin accepting orders for AI-ready servers built for industrial deployments. Each system packs the new L40S GPU, the BlueField-3 DPU, and NVIDIA AI Enterprise software, aimed at those who want to privately own the hardware that runs their generative AI applications.

Brands like ASUS, Dell Technologies, Hewlett Packard Enterprise, Lenovo, and more are reported to be shipping completed products by year-end.

Designed as full-stack accelerated infrastructure, these servers offer the beastly performance that industries need as they race to adopt generative AI. The applications range from those that can benefit mankind on a huge scale, like drug development and fraud detection, all the way down to pure business use like retail product descriptions and intelligent virtual assistants.

In terms of the hardware, the L40S GPU delivers up to 1.7x the training performance and up to 1.2x the generative AI inference performance of the A100. The BlueField DPUs handle everything else by accelerating, offloading, and isolating the general compute load, while ConnectX-7 SmartNICs offer ultra-low networking latencies between systems.

NVIDIA x VMware for Generative AI

As for the second part of the announcement, Team Green and VMware will be launching a new platform called ‘VMware Private AI Foundation with NVIDIA’ (Yes, very straightforward indeed).

This partnership aims to meet the demands of generative AI by combining the infrastructure of VMware Cloud Foundation with accelerated computing from NVIDIA.

As a result, both companies expect enterprises to start producing secure, private models for internal use, trained on each business's own critical or sensitive data so that the model knows what it is doing and does it right.

With a choice of starting points, from NVIDIA NeMo to Llama 2 and perhaps more in the future, companies new to this field will be able to skip the heavy initial hardware investment and then scale as much as they need with a simple plan upgrade.

The virtualized environment also means that pooled resources can be shared and balanced across workloads, or at the very least used efficiently.

Since this is a partnership with NVIDIA, the platform will feature NVIDIA NeMo, the end-to-end, cloud-native framework included in NVIDIA AI Enterprise (the operating system of the NVIDIA AI platform) that allows enterprises to build, customize, and deploy generative AI models virtually anywhere. NeMo combines customization frameworks, guardrail toolkits, data curation tools, and pre-trained models, making it an easy, cost-effective fast lane onto the generative AI train.

Availability-wise, both parties are expecting an early 2024 release.