Intel’s Innovation 2023 big tech exhibition concluded last week and here’s a quick recap of the important stuff that will somehow affect normal consumers in the long run.

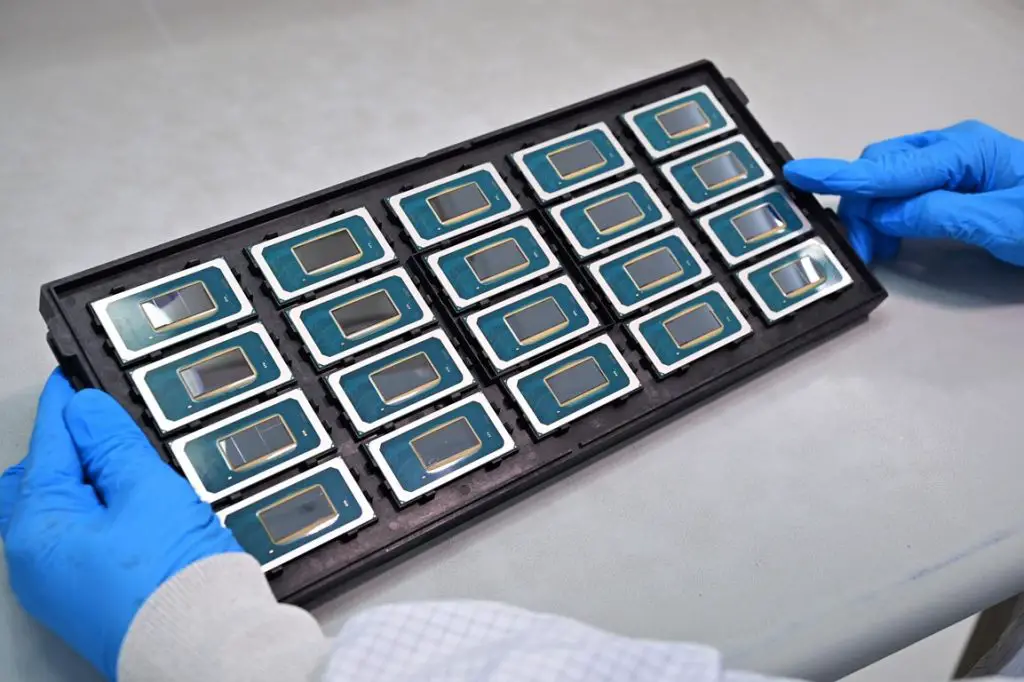

New packaging and multi-chiplet solutions

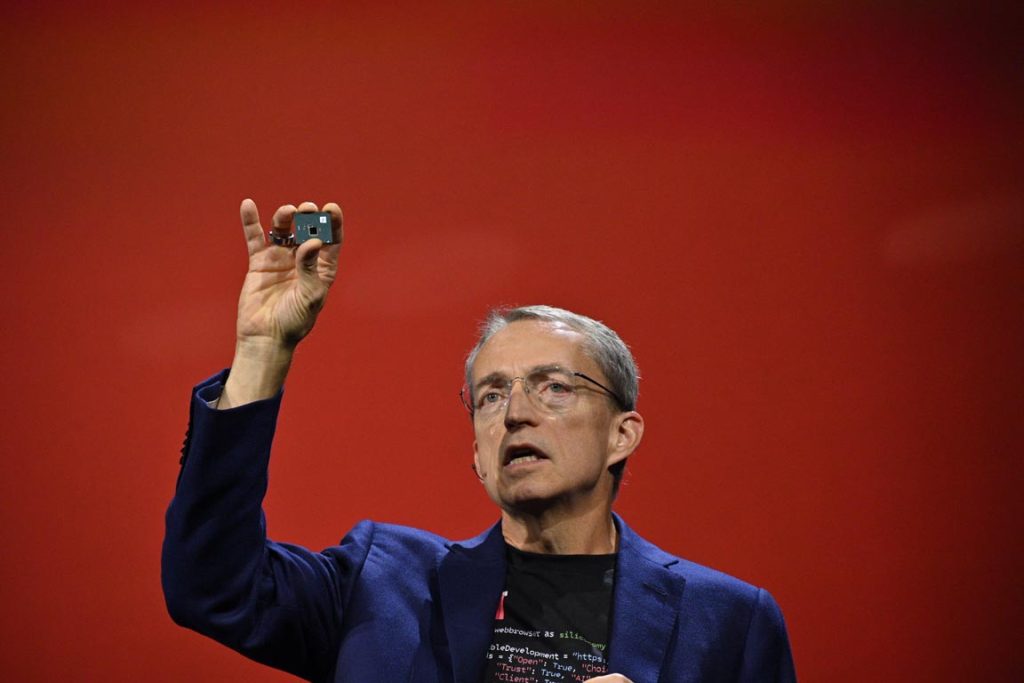

CEO Pat Gelsinger started off with a quick update on Intel’s fabrication processes: Intel 7 is now in high-volume manufacturing, Intel 4 is manufacturing-ready, and Intel 3 is on track for the end of 2023.

But to push things even further, Team Blue announced a breakthrough in glass substrates. With the material enabling continued scaling of transistors within a package, Intel is confident that Moore’s Law will still apply beyond 2030.

On the multi-chiplet side, a test package built with Universal Chiplet Interconnect Express (UCIe) was showcased as well. For the unaware, UCIe is an open standard pushing for wide adoption so that chiplets from various vendors can be integrated into one package, with diverse AI workloads as the goal.

Chonky 288-core Xeon CPU

As one of the major forces driving AI computing through the “CPU route”, Intel dropped the first big bomb in the form of an upcoming AI supercomputer built entirely on Xeon processors and 4,000 Gaudi2 AI hardware accelerators.

It was followed by a preview of 5th Gen Intel Xeon processors, which launch on December 14.

“Sierra Forest”, the E-core focused chip that plays the efficiency game on the server scale, follows in the first half of 2024. It is expected to deliver 2.5x better rack density and 2.4x higher performance per watt over 4th Gen Xeon, and it goes as high as 288 cores.

As a result, data centers running less compute-intensive tasks on a super large scale will be able to enjoy much more output in this generation.
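To get a feel for what those two multipliers mean in practice, here is a rough back-of-the-envelope sketch. Only the 2.4x and 2.5x figures come from Intel's claims; the baseline rack power, performance-per-watt value and rack count are made-up numbers for illustration, and treating "rack density" as "fewer racks for the same deployment" is a simplified reading rather than Intel's official definition.

```python
import math

# Claimed gains over 4th Gen Xeon (from the announcement).
PERF_PER_WATT_GAIN = 2.4
RACK_DENSITY_GAIN = 2.5

# Hypothetical baseline figures, chosen only to make the arithmetic concrete.
baseline_perf_per_watt = 1.0    # arbitrary work units per watt (assumption)
rack_power_budget_w = 10_000    # assumed fixed power budget per rack
baseline_racks = 100            # assumed size of an existing deployment

# Same power budget, more work done.
baseline_throughput = baseline_perf_per_watt * rack_power_budget_w
new_throughput = baseline_throughput * PERF_PER_WATT_GAIN

# Same work done, less power drawn.
power_for_same_work_w = rack_power_budget_w / PERF_PER_WATT_GAIN

# Simplified reading of rack density: the same deployment fits in fewer racks.
racks_needed = math.ceil(baseline_racks / RACK_DENSITY_GAIN)

print(f"Throughput at the same power: {new_throughput:.0f} units")
print(f"Power for the same work: {power_for_same_work_w:.0f} W")
print(f"Racks needed: {racks_needed} instead of {baseline_racks}")
```

Real-world savings depend on the workload, so treat this as a way to read the marketing numbers, not a benchmark.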

Granite Rapids, the P-core counterpart to Sierra Forest, is gunning for the top of the performance charts, with a claimed 2x to 3x better AI performance than 4th Gen Xeon.

As for what’s coming in 2025, E-Core Xeon “Clearwater Forest” will be fabricated on the Intel 18A process node but that’s all they announced for now.

Gelsinger spotlighted the range of AI technology available to developers across Intel platforms today – and how that range will dramatically increase over the coming year.

Intel Core rebrand with extra AI juice

Pretty sure you’ve already heard Intel is dropping the “i” thing in the Core CPU lineup in favor of just Core and Core Ultra.

Back then, we only knew that Core Ultra would most likely group the unlockable chips into one tier while plain Core takes the rest, but it seems there’s more going on in the background.

Intel Core Ultra processors, codenamed “Meteor Lake”, will be getting an integrated neural processing unit (NPU) for power-efficient AI acceleration and local inferencing on a standard consumer-grade PC.

The chips, built with Foveros packaging technology, will launch on December 14, with desktop counterparts coming next year (yes, Meteor Lake is the mobile department).

Other nitty-gritty things

Here’s a quick point-form summary of other stuff that may or may not pique your interest as an average IT consumer.

- Intel Developer Cloud is now generally available for all clients with all the latest computing hardware accessible in cloud computing packages

- In-house AI inferencing and deployment runtime OpenVINO updated and released over at OpenVINO.ai, allowing more developers to optimize standard PyTorch, TensorFlow or ONNX models with better model compression tech, improved GPU support and memory consumption, as well as full support for the upcoming Meteor Lake Core Ultra CPUs.

- Intel Trust Authority, a new service in Intel’s software security portfolio, offers unified yet independent assessments of trusted execution environment integrity and policy enforcement. It is available for all sorts of platforms, including multi-cloud, hybrid, on-premises and at the edge, as long as Intel confidential computing is deployed, for the sake of protected AI computing

- Collaboration with software vendors Red Hat, Canonical and SUSE to make sure their offerings comply with and fully support the latest Intel architectures

- Kubernetes gets an Auto Pilot for pod resource rightsizing, enabling automatic and optimized capacity management

- Intel Granulate adds autonomous orchestration capabilities for Databricks workloads for better cost reduction and faster processing time with no code changes

- An Application-Specific Integrated Circuit (ASIC) accelerator dedicated to processing encrypted data without decrypting it, for absolutely secure computing, is in the works
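
If "processing encrypted data without decryption" sounds like magic, the general idea is known as homomorphic encryption. The toy sketch below is not Intel's design and is nowhere near secure. It uses textbook RSA with tiny, hard-coded primes (all values here are illustrative assumptions) purely to show the core trick: multiplying two ciphertexts gives a ciphertext of the product, so whoever does the math never sees the plaintext numbers. Real schemes support far more than multiplication and are much heavier, which is exactly why dedicated hardware is interesting.

```python
# Toy textbook RSA with tiny primes: insecure, for illustration only.
p, q = 61, 53
n = p * q                   # public modulus
phi = (p - 1) * (q - 1)
e = 17                      # public exponent
d = pow(e, -1, phi)         # private exponent (modular inverse, Python 3.8+)

def encrypt(m: int) -> int:
    """Encrypt a small integer with the public key (e, n)."""
    return pow(m, e, n)

def decrypt(c: int) -> int:
    """Decrypt a ciphertext with the private key (d, n)."""
    return pow(c, d, n)

a, b = 7, 6

# The "untrusted server" only ever sees ciphertexts and multiplies them.
c_product = (encrypt(a) * encrypt(b)) % n

# Only the key holder can decrypt, and gets a * b back.
result = decrypt(c_product)
print(result == a * b)
```

This only works here because `a * b` is smaller than `n`; larger values would wrap around the modulus.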