At COMPUTEX 2022, GPU giant NVIDIA not only had great news for everyday consumers like you and me, but also shared updates and roadmaps for the enterprise market to help partners and clients transition into the next generation of high-performance computing. Let’s take a look at what those are.

The info comes straight from experts across various departments of Team Green:

- Ian Buck, Vice President of Accelerated Computing

- Brian Kelleher, Senior Vice President, Hardware Engineering

- Ying Yin Shih, Director of Product Management, Accelerated Computing

- Michael Kagan, Chief Technology Officer

- Deepu Talla, Vice President of Embedded and Edge Computing

- Jeff Fisher, Senior Vice President of GeForce

Grace and Hopper’s big debut in Taiwan

The first big news: several Taiwan-based computer makers are closing in on the release of the very first systems powered by the NVIDIA Grace CPU Superchip and Grace Hopper Superchip, targeting high-performance digital twins, cloud graphics, cloud gaming, and general computing.

With availability expected in 1H 2023, there will be a dozen models from ASUS, Foxconn Industrial Internet, GIGABYTE, QCT, Supermicro, and Wiwynn, so customers can pick the option that integrates most smoothly with their existing x86 and Arm-based servers.

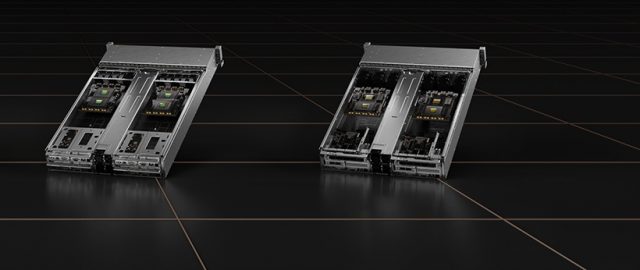

As a quick recap, the Grace CPU Superchip is a dual-CPU package whose two chips are connected coherently over an NVLink-C2C interconnect. Together they offer up to 144 high-performance Arm v9 cores with scalable vector extensions and a whopping 1 TB/s memory subsystem. NVIDIA says this configuration delivers double the memory bandwidth and energy efficiency while topping the performance charts at the same time.

The Grace Hopper Superchip, on the other hand, pairs an NVIDIA Hopper GPU with a single Grace CPU over the same NVLink-C2C interconnect, hence the name “Grace Hopper”. This combo is better suited to HPC and AI applications at giant scale. According to NVIDIA’s internal data, the duo can transfer data 15 times faster than a traditional CPU + Hopper GPU pairing.

When it comes to ready-to-deploy solutions, the Grace CPU Superchip and Grace Hopper Superchip server design portfolio will come in one-, two-, and four-way configurations across four workload-specific designs:

- NVIDIA HGX Grace Hopper systems running Grace Hopper Superchip + NVIDIA BlueField-3 DPUs

- NVIDIA HGX Grace systems running Grace CPU Superchip + BlueField-3 DPUs

- NVIDIA OVX systems running Grace CPU Superchip + BlueField-3 DPUs + NVIDIA GPUs

- NVIDIA CGX systems running Grace CPU Superchip + BlueField-3 DPUs + NVIDIA A16 GPUs

NVIDIA’s full in-house software stack, including Omniverse, RTX, NVIDIA HPC, and NVIDIA AI, is optimized for these designs, and third-party offerings will have more chances to earn NVIDIA-Certified Systems verification thanks to the expansion of the program.

Large-scale green GPU computing with liquid-cooled NVIDIA A100

Demand for green computing among global-scale organizations grows year after year. Data centers that hold and process data are practically the backbone of the modern Internet, but running these exascale infrastructures is no easy task, especially once sustainability enters the equation.

This is where NVIDIA steps in, giving mainstream servers the option of a liquid-cooled A100 PCIe GPU. The first adopter is Equinix, a US-based global service provider that has launched a dedicated facility to pursue advances in energy efficiency.

Even with conventional air cooling, switching all of the world’s CPU-only AI and HPC servers to GPU-accelerated systems could save a whopping 11 trillion watt-hours (11 TWh) of energy a year. Is the number too big to picture? It is roughly what 1.5 million households consume in a year. Now you get it.
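To make that comparison a little more concrete, here is a minimal Python sketch of the arithmetic behind it. It uses only the two figures quoted above; the per-household number it produces is derived from them and is not something NVIDIA stated. The function names are made up for illustration.

```python
# Figures quoted in the article (NVIDIA's estimate).
ENERGY_SAVED_WH_PER_YEAR = 11e12  # 11 trillion watt-hours
HOUSEHOLDS = 1.5e6                # 1.5 million households


def to_terawatt_hours(total_wh: float) -> float:
    """Convert watt-hours to terawatt-hours (1 TWh = 1e12 Wh)."""
    return total_wh / 1e12


def implied_household_kwh(total_wh: float, households: float) -> float:
    """Annual consumption per household, in kWh, implied by the two figures."""
    return total_wh / households / 1_000


if __name__ == "__main__":
    twh = to_terawatt_hours(ENERGY_SAVED_WH_PER_YEAR)
    kwh = implied_household_kwh(ENERGY_SAVED_WH_PER_YEAR, HOUSEHOLDS)
    print(f"Total saving: {twh:.0f} TWh per year")
    print(f"Implied usage per household: {kwh:,.0f} kWh per year")
```

In other words, the two figures together imply a little over 7,000 kWh per household per year.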

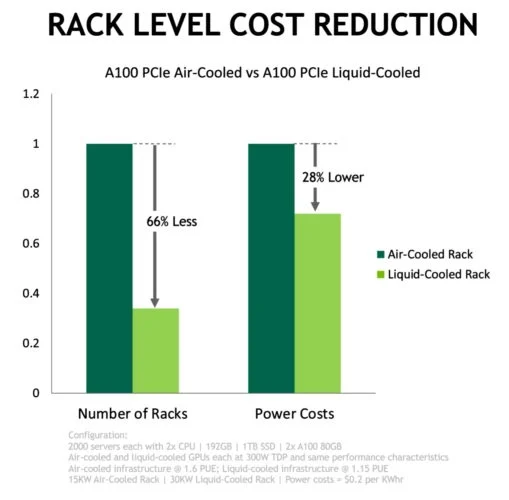

On paper, the liquid-cooled A100 PCIe GPU’s headline advantage is roughly 30% lower energy consumption. On top of that, liquid cooling lets the GPU occupy only 1 PCIe slot where the standard air-cooled model uses 2, so you can essentially pack twice as much computing power into the same space.

As of now, Equinix is qualifying these A100 80GB PCIe Liquid-Cooled GPUs for deployment, with general availability expected this summer.
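The density and energy claims can be sketched in a few lines of Python. The slot widths and the roughly 30% figure come from the paragraph above; the 8-slot chassis and the 1,000 kWh baseline are purely hypothetical values chosen for illustration, as are the function names.

```python
# Slot widths and energy reduction as quoted in the article.
AIR_COOLED_SLOTS_PER_GPU = 2
LIQUID_COOLED_SLOTS_PER_GPU = 1
ENERGY_REDUCTION = 0.30  # "roughly 30%" lower energy consumption


def gpus_that_fit(free_slots: int, slots_per_gpu: int) -> int:
    """How many GPUs of a given slot width fit into the free PCIe slots."""
    return free_slots // slots_per_gpu


def energy_after_switch(baseline_kwh: float,
                        reduction: float = ENERGY_REDUCTION) -> float:
    """Energy use after applying the quoted fractional reduction."""
    return baseline_kwh * (1 - reduction)


if __name__ == "__main__":
    chassis_slots = 8      # hypothetical chassis, not from the article
    baseline_kwh = 1000.0  # hypothetical baseline, not from the article

    air = gpus_that_fit(chassis_slots, AIR_COOLED_SLOTS_PER_GPU)
    liquid = gpus_that_fit(chassis_slots, LIQUID_COOLED_SLOTS_PER_GPU)
    print(f"Air-cooled GPUs per chassis:    {air}")
    print(f"Liquid-cooled GPUs per chassis: {liquid}")
    print(f"Energy after switch: {energy_after_switch(baseline_kwh):.0f} kWh")
```

Whatever slot count you plug in, the single-slot card fits twice as many GPUs as the dual-slot one, as long as the count is even.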

Brand new Jetson AGX Orin servers and appliances by NVIDIA partners

Aside from NVIDIA’s own internal projects, more than 30 of its partners are going to release new Jetson AGX Orin production systems to take what Team Green is doing to the next level.

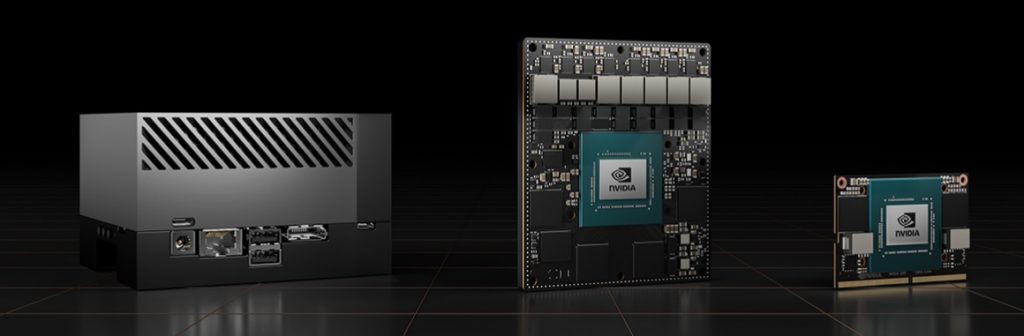

In case you’re not familiar with the subject, the NVIDIA Jetson series is basically a System-on-Module (SoM) package that combines everything an AI-based application needs including GPU, memory, connectivity, power management, interfaces, and more, into a single and easily accessible form factor.

The Jetson AGX Orin dev kit made its way into the hands of developers in March this year, and the Jetson platform as a whole now counts 1 million developers and more than 6,000 companies building commercial products.

The Jetson AGX Orin features an Ampere-based GPU, Arm Cortex-A78AE CPUs, and next-generation deep learning and vision accelerators, tied together by high-speed interfaces and higher memory bandwidth. Multimodal sensor support lets it feed multiple concurrent AI application pipelines, and NVIDIA is confident it will be the king of the edge AI market.

As for the release timeframe, NVIDIA says Jetson AGX Orin production modules arrive in July, with Orin NX modules following in September.

As for the form in which they will enter the market, Jetson-based products are more flexible than you might think: they can be servers, edge appliances, industrial PCs, carrier boards, AI software, and more. Cooling fans are optional depending on thermal constraints, and plenty of brands are ready to release products in the coming days, including the following:

Taiwan-based NVIDIA Partner Network Brands (Including but Not Limited to)

- AAEON

- Adlink

- Advantech

- Aetina

- AIMobile

- Appropho

- Avermedia

- Axiomtek

- EverFocus

- Neousys

- Onyx

- Vecow

Worldwide Brands (Including but Not Limited to)

- Auvidea

- Basler AG

- Connect Tech

- D3 Engineering

- Diamond Systems

- e-Con Systems

- Forecr

- Framos

- Infineon

- Leetop

- Leopard Imaging

- Miivii

- Quectel

- RidgeRun

- Sequitur

- Silex

- SmartCow

- Stereolabs

- Syslogic

- Realtimes

- Telit

- TZTEK

To wrap up, the Jetson platform also enables developers to solve some of the toughest problems by providing comprehensive software support capable of handling some of the most complex models in natural language understanding, 3D perception, multisensor fusion, and other areas.