NVIDIA has announced that, over the last few months, big names in the tech, entertainment, and manufacturing industries have jumped on board early with RTX PRO-powered servers, a new class of enterprise data center infrastructure built around the RTX PRO 6000 Blackwell Server Edition GPU.

With AI now an integral part of nearly every industry that relies on computing, it is increasingly common to see market segments that don’t usually overlap embrace the same technology. For starters, Disney’s Josh D’Amaro says the company is using the tech to push immersive storytelling further, including powering a major update to the Millennium Falcon: Smugglers Run ride at Disneyland and Disney World, which will debut alongside the movie ‘The Mandalorian and Grogu’. Foxconn’s Young Liu talked about using RTX PRO Servers for AI-driven automation in robotics, logistics, and EVs, while Hitachi’s Toshiaki Tokunaga said Hitachi will use them to boost AI reasoning, optimize infrastructure, and expand its digital twin technology.

Over in automotive, Hyundai’s Heung-Soo Kim revealed that Hyundai will tap the servers for digital twin testbeds to speed up factory construction and autonomous driving development. Pharma giant Lilly’s Diogo Rau noted that GPUs are helping the company explore vast possibilities in drug discovery, while SAP’s Christian Klein highlighted how pairing RTX PRO with SAP Cloud Infrastructure strengthens business AI without sacrificing data control. And then there’s TSMC’s C.C. Wei, who sees RTX PRO Servers as a way to supercharge semiconductor manufacturing and streamline fab operations.

Beyond these giants, a whole range of companies are already experimenting with RTX PRO Servers. PEGATRON, Siemens, and Wistron are deploying them for factory automation and simulation, PubMatic is exploring new AI advertising use cases, Northrop Grumman is integrating them into aerospace workflows, and even Amdocs is using them to power AI agents for telecom customer experiences. Meanwhile, Cadence, Siemens EDA, and Synopsys are speeding up AI-driven chip design simulations.

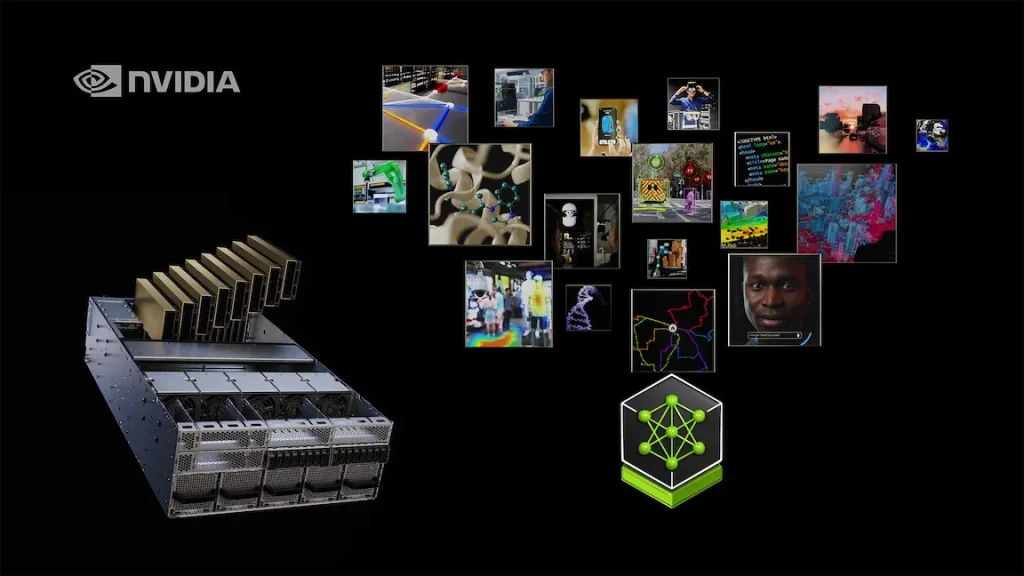

Performance-wise, RTX PRO Servers are built on the Blackwell architecture and are showing some serious gains. For example, NVIDIA says its Llama Nemotron Super reasoning model can achieve up to 3x better price-performance on the RTX PRO 6000 GPU than on H100-based setups. The company is also touting 4x faster digital twin and simulation workflows compared with L40S GPUs. And of course, flexibility remains the keystone of industrial hardware: RTX PRO Servers run natively on Windows, Linux, and leading hypervisors, and they’re fully backed by NVIDIA’s AI Enterprise software stack, which includes NIM microservices, Omniverse libraries, and Cosmos models for physical AI.

As for availability, enterprises can grab RTX PRO Servers from Cisco, Dell, HPE, Lenovo, Supermicro, and a long list of other OEMs like ASUS, MSI, GIGABYTE, Foxconn, and PEGATRON. Cloud providers are also getting in on it – CoreWeave and Google Cloud already have RTX PRO-powered instances live, with AWS, Nebius, and Vu rolling out later this year.