At COMPUTEX 2025, SMART Modular Technologies brought something different to the table. While a lot of booths were about RGB and new silicon, SMART Modular focused on what really keeps high-performance enterprise systems running: memory. And not just any memory, but CXL-based expansion cards and persistent memory modules designed to stretch server limits without breaking the bank.

Whether you’re running AI, big data, or in-memory databases, these solutions are made to give data centers more capacity, better bandwidth, and a lower total cost of ownership.

What’s CXL, and Why Does It Matter?

CXL (Compute Express Link) is a next-gen interconnect built on PCIe. It gives CPUs low-latency, high-bandwidth access to memory or other devices outside of the usual RAM slots. In simpler terms, CXL lets you expand and pool memory in ways that weren’t possible before — without having to swap out your CPU or build a new server platform.

SMART Modular is taking full advantage of this with two product categories: Add-in Cards (AICs) and CMM E3.S modules.

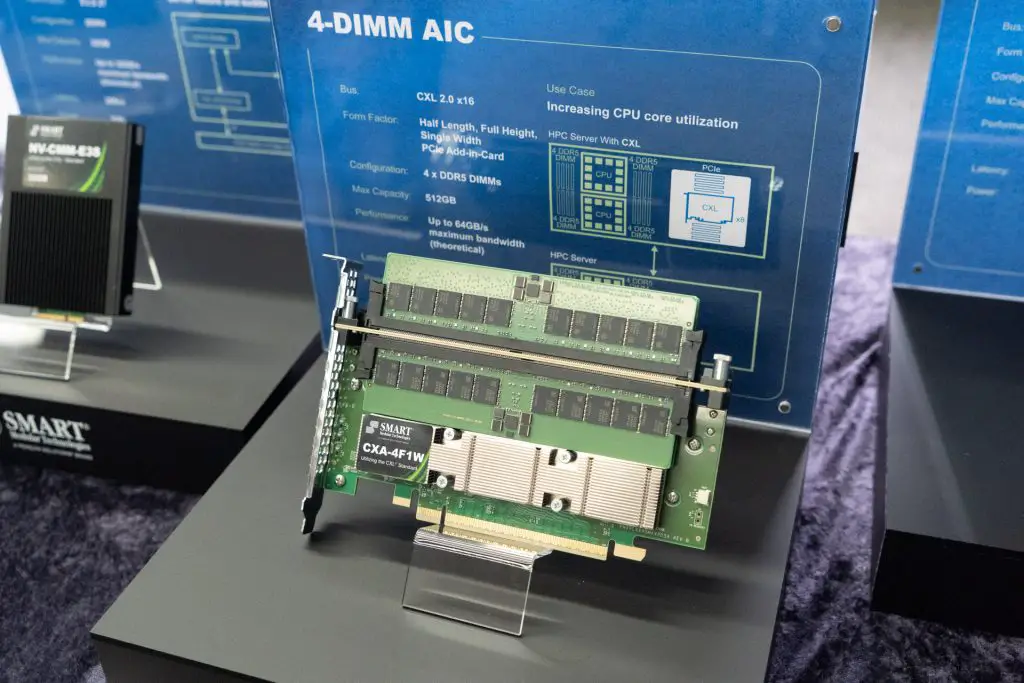

CXL AIC Expansion Cards: Easy Memory Scaling for Big Workloads

SMART’s CXL-based AICs are plug-and-play memory expansion cards that fit into regular PCIe slots — no server rebuilds required. These cards allow you to add hundreds of gigabytes, even terabytes of memory to your server with ease.

Here’s what makes them stand out:

- Huge Capacity Options: Choose between 4-DIMM or 8-DIMM setups. One card can handle up to 1TB of additional memory, depending on the configuration.

- Reliable Performance: Thanks to the CXL protocol, latency can be kept at around 310 ns, which is far lower than NVMe SSDs, whose latency is less consistent and ranges from roughly 100 μs to 1 ms. You also get extra memory channels to ease memory bottlenecks.

- Modular Scaling: Add just as much memory as your workload needs — no more, no less. Perfect for cloud, AI, or enterprise environments that constantly shift.

- Better Cost Efficiency: Instead of upgrading to new CPUs just to support more RAM, pair cheaper DIMMs with these AICs and get more capacity for less. SMART Modular estimates up to 40% savings for 1TB memory configurations.

- No Infrastructure Overhaul Needed: If your server has PCIe slots that support CXL, you’re ready to go. Just plug in and start expanding.
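To put the latency figures from the list above in perspective, here is a quick back-of-the-envelope calculation. It uses only the numbers quoted in this article (about 310 ns for CXL memory, 100 μs to 1 ms for NVMe SSDs); the constant and function names are made up for illustration, and real-world results will vary by platform and workload.

```python
# Latency figures as quoted in the article, not independent measurements.
CXL_LATENCY_NS = 310
NVME_LATENCY_NS_LOW = 100_000      # 100 microseconds
NVME_LATENCY_NS_HIGH = 1_000_000   # 1 millisecond


def latency_ratio(slower_ns, faster_ns):
    """Return how many times longer the slower access takes."""
    return slower_ns / faster_ns


low = latency_ratio(NVME_LATENCY_NS_LOW, CXL_LATENCY_NS)
high = latency_ratio(NVME_LATENCY_NS_HIGH, CXL_LATENCY_NS)

print(f"NVMe access takes roughly {low:.0f}x to {high:.0f}x longer than CXL memory")
```

By these figures, a single access to CXL-attached memory is somewhere between a few hundred and a few thousand times faster than going out to an NVMe SSD.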

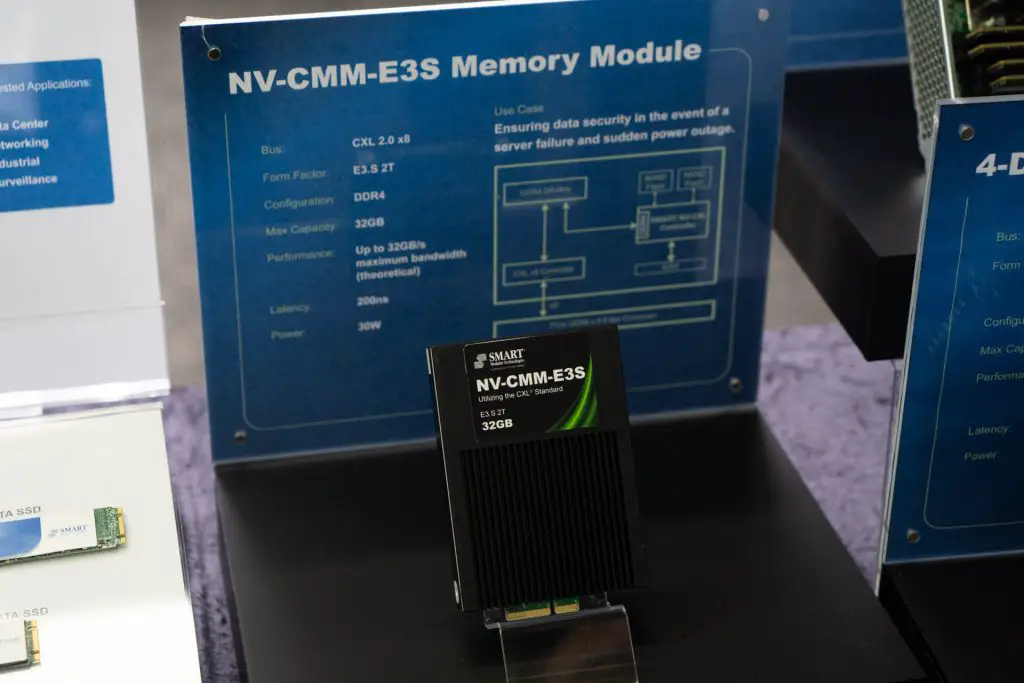

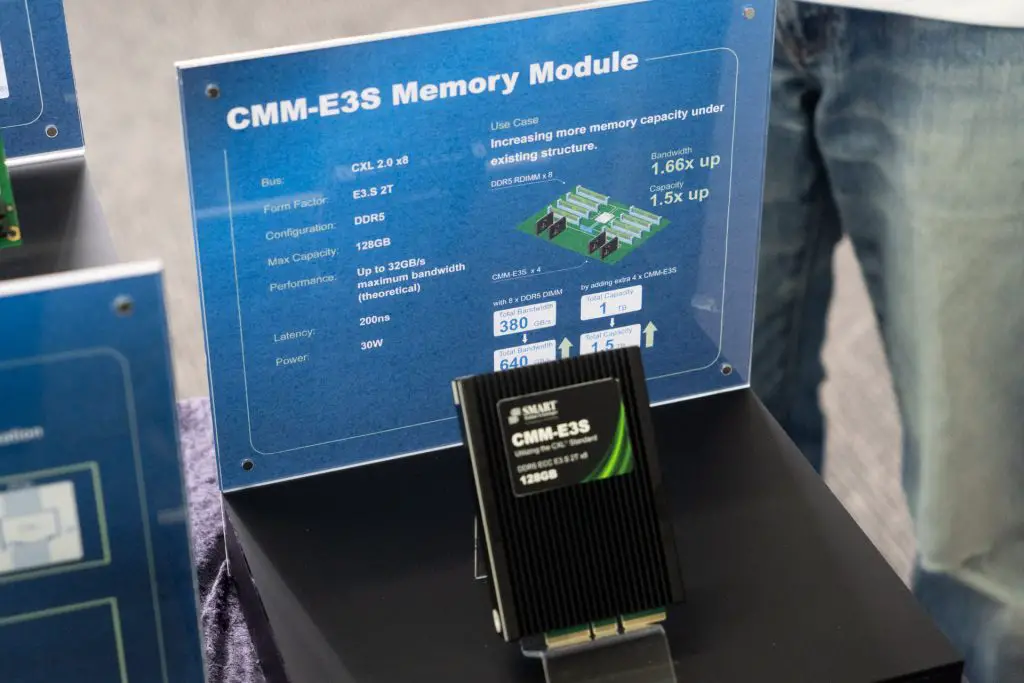

CMM E3.S Modules: Persistent Memory That Does More

On the persistent memory front, SMART Modular also showed off their CMM E3.S modules, including the NV-CMM, a non-volatile CXL memory module.

These are designed to deliver performance and data durability in a compact, server-friendly form factor:

- Fast and Persistent: The NV-CMM mixes high-speed DRAM with persistent flash storage and backup power, so data stays safe even during power loss. This is key for tasks like checkpointing, caching, and system recovery.

- Ideal for AI and Databases: The speed and reliability make these modules a solid fit for AI workloads, virtual machines, and high-performance databases.

- Modern Form Factor: Built in the compact E3.S 2T form factor, these modules fit easily into dense server environments and are ready for the newest server architectures.

- Reliable and Scalable: Less downtime, faster data recovery, and smoother VM restarts all help keep operations running without hiccups.

Lower TCO Through Smarter Memory Usage

One of the biggest advantages of these CXL solutions is the potential for cost savings. By pooling and disaggregating memory, you avoid wasting resources on “stranded” memory that often sits unused in traditional systems.
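The idea of stranded memory is easier to see with numbers. The sketch below is a simplified model, not SMART Modular's sizing method: the per-server demand figures, the 1024 GB and 256 GB sizes, and the function names are all hypothetical, chosen only to show how pooling can reduce the total memory a fleet needs.

```python
# Hypothetical peak memory demand (GB) for four servers.
demands_gb = [200, 350, 900, 400]


def stranded_gb(local_gb, demands):
    """Memory installed but unused when every server gets the same fixed DRAM."""
    return sum(max(local_gb - d, 0) for d in demands)


def pooled_total_gb(base_local_gb, demands):
    """Total memory needed when each server keeps a small local base
    and draws any overflow from a shared pool."""
    overflow = sum(max(d - base_local_gb, 0) for d in demands)
    return base_local_gb * len(demands) + overflow


# Traditional approach: size every server for the worst case (1024 GB each).
fixed_total = 1024 * len(demands_gb)
print(f"Fixed provisioning: {fixed_total} GB installed, "
      f"{stranded_gb(1024, demands_gb)} GB stranded")

# Pooled approach: 256 GB local per server plus a shared pool for overflow.
print(f"Pooled provisioning: {pooled_total_gb(256, demands_gb)} GB installed")
```

In this made-up scenario, fixed provisioning installs 4096 GB and leaves 2246 GB idle, while the pooled layout covers the same demand with 1906 GB. Actual savings depend on how demand is distributed and on how the pool is shared across hosts.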

Final Thoughts

While the rest of the show was flashing lights and GPU power, SMART Modular dropped some of the most impactful tech at COMPUTEX 2025. Its CXL-based AICs and persistent memory modules offer a smarter, more scalable path forward for data centers dealing with heavy AI, big data, and high-speed compute workloads.

It’s not flashy, but it’s powerful — and in a world where memory is often the bottleneck, this could be a game changer for how modern servers are built and scaled.